Launching a Web Server and the Python Interpreter on Top of a Docker Container

Launching a Docker container and running an application on top of it is one of the important use cases we learned about in the last blog. You can refer to that blog by using this link.

Let's take a closer look at Docker.

What is Docker?

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and deploy it as one package. By doing so, thanks to the container, the developer can rest assured that the application will run on any other Linux machine regardless of any customized settings that the machine might have that could differ from the machine used for writing and testing the code.

In a way, Docker is a bit like a virtual machine. But unlike a virtual machine, rather than creating a whole virtual operating system, Docker allows applications to use the same Linux kernel as the system that they're running on and only requires applications to be shipped with things not already running on the host computer. This gives a significant performance boost and reduces the size of the application.

And importantly, Docker is open source. This means that anyone can contribute to Docker and extend it to meet their own needs if they need additional features that aren't available out of the box.

Docker provides tooling and a platform to manage the lifecycle of your containers:

Develop your application and its supporting components using containers.

The container becomes the unit for distributing and testing your application.

When you’re ready, deploy your application into your production environment, as a container or an orchestrated service. This works the same whether your production environment is a local data center, a cloud provider, or a hybrid of the two.
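The three lifecycle stages above can be sketched as shell helpers. This is only a rough illustration, not a prescribed workflow: the image name `registry.example.com/myteam/myapp:1.0` and the container name `myapp` are made-up placeholders, and the sketch assumes a Dockerfile exists in the build directory. Everything is wrapped in functions, so nothing runs until you call them.

```shell
# Hypothetical image name; replace it with your own registry and repository.
APP_IMAGE="${APP_IMAGE:-registry.example.com/myteam/myapp:1.0}"

# Develop: build an image from the Dockerfile in the given directory
# (defaults to the current directory).
build_app() {
    docker build -t "$APP_IMAGE" "${1:-.}"
}

# Distribute: push the image so that others can pull and test it.
publish_app() {
    docker push "$APP_IMAGE"
}

# Deploy: run the same image as a detached container.
deploy_app() {
    docker run -d --name myapp "$APP_IMAGE"
}
```

Calling `build_app`, then `publish_app`, then `deploy_app` walks through develop, distribute, and deploy in order.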

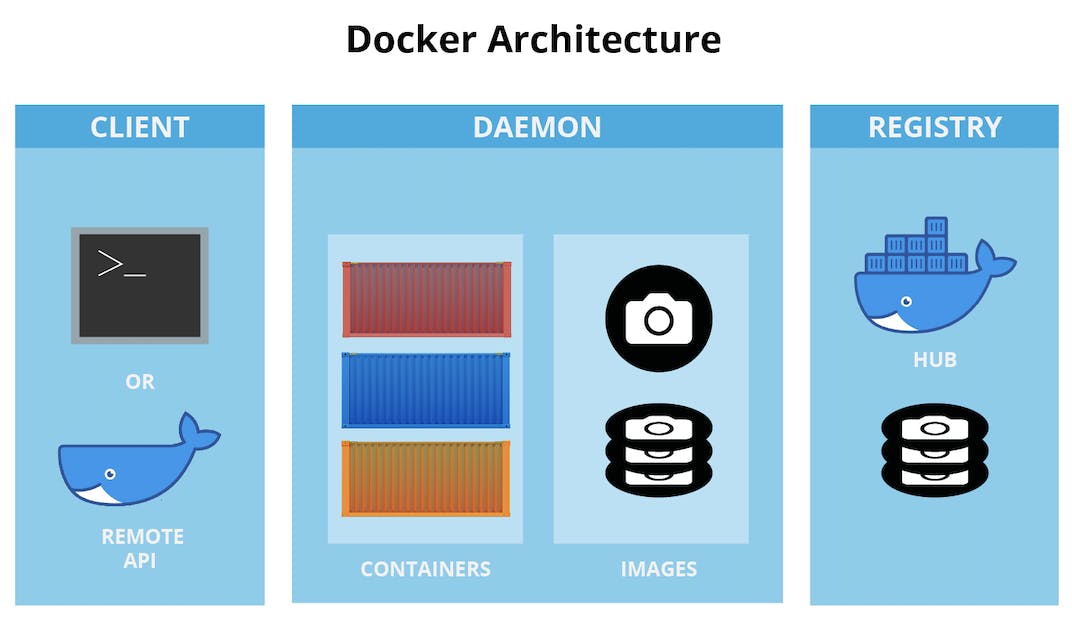

Docker Architecture

The architecture of Docker consists of Client, Registry, Host, and Storage components. The roles and functions of each are explained below.

Docker’s Client

The Docker client is the command-line interface (CLI) you use to send commands to the Docker daemon. A single client can talk to more than one daemon, and the daemon it targets can stay the same or change over time. The three main commands it sends are docker build, docker pull, and docker run.
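To make the "one client, several daemons" idea concrete, here is a small sketch. It relies on the `-H` (host) option of the `docker` CLI; the SSH target passed in (for example `user@build-server`) is a hypothetical machine, not something from this setup.

```shell
# Ask the local daemon (the default target) for its running containers.
list_local() {
    docker ps
}

# Ask a daemon on another machine over SSH.
# "$1" is a hypothetical target such as user@build-server.
list_remote() {
    docker -H "ssh://$1" ps
}
```

The same client binary is used in both cases; only the daemon it talks to changes.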

Docker Host

A Docker host executes and runs container-based applications. It manages things like images, containers, networks, and storage volumes. The Docker daemon, a crucial component of the host, performs the essential container-running functions and receives commands from either the Docker client or other daemons to get its work done.

Docker Objects

Images

Images are read-only templates from which containers are created. They also contain metadata that describes the capabilities of the container, its dependencies, and the other components it needs to function. Images are used to store and ship applications. A base image can be used on its own, or it can be customized, for example to add new elements or extend its capabilities.
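If you want to look at that metadata yourself, the sketch below shows one way to do it. It assumes the image is already present locally; the helper names are made up for this post.

```shell
# Print the default command stored in the image metadata, as JSON.
image_cmd() {
    docker image inspect --format '{{json .Config.Cmd}}' "$1"
}

# List the layers the image was built from.
image_layers() {
    docker history "$1"
}
```

For example, `image_cmd centos:7` would show the command a container from that image runs by default (the image name here is only an example).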

You can share a private image with other employees within your company using a private registry, or you can share the image globally using a public registry like Docker Hub. This is a substantial benefit for businesses, because it greatly simplifies collaboration within a company and even between organizations, something that used to be much harder.

Containers

Containers are sort of like mini environments in which you run applications. And the great thing about containers is that they contain everything an application needs to do its job in an isolated environment. A container can access only the resources it has been given: by default, the contents of its image, plus whatever networks, volumes, or devices you attach when you start it.

Containers are defined by the image and any additional configuration options provided when the container starts, including but not limited to network connections and storage options. You can also create a new image based on the current state of a container. Because containers are much lighter than virtual machines, they spin up within seconds and give you much better server density.
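Creating a new image from the current state of a container is done with `docker commit`. Here is a minimal sketch; the helper name and the example tag `task7-httpd:v1` are assumptions for illustration.

```shell
# snapshot_container CONTAINER NEW_IMAGE
# Save the current state of CONTAINER as NEW_IMAGE, then list the new image.
snapshot_container() {
    docker commit "$1" "$2" &&
    docker image ls "$2"
}
```

A call such as `snapshot_container Task_7 task7-httpd:v1` would capture whatever has been installed in the container so far.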

Networks

Docker networking is the channel through which isolated containers communicate. There are five main network drivers in Docker:

Bridge: This is the default network driver. You use it when your application runs in standalone containers on a single host. Containers attached to the same bridge network can communicate with each other, and they stay isolated from containers on other bridge networks. A container's ports are not reachable from outside the host unless you publish them.

Host: This driver removes the network isolation between the container and the Docker host. The container shares the host's network stack directly, so it does not get an IP address of its own, and an application listening on a port inside the container is reachable on that same port of the host.

Overlay network: This is a type of software-defined networking that spans multiple Docker hosts, so containers running on different hosts can communicate with each other. It is typically used with swarm services.

None: A "none" type driver means that networking is disabled for the container.

Macvlan: To assign a MAC address to containers so that they appear as physical devices on your network, you can use the Macvlan driver. What makes this unique is that the Docker daemon routes traffic to containers by their MAC addresses. Use this network when you want the containers to look like physical devices, for example during the migration of a VM setup.
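Two of these drivers are easy to try out on a single host. The sketch below is only an illustration: the network name `demo_net`, the container name `web`, and the choice of the `httpd:2.4` and `busybox` images are all assumptions, not part of the original setup.

```shell
# Bridge: create a user-defined bridge network, start a web server on it,
# and reach that server by name from a second container on the same network.
demo_bridge() {
    docker network create --driver bridge demo_net &&
    docker run -d --name web --network demo_net httpd:2.4 &&
    docker run --rm --network demo_net busybox ping -c 1 web
}

# None: start a container with networking disabled and list its interfaces.
run_isolated() {
    docker run --rm --network none busybox ip addr
}
```

On a user-defined bridge network, containers can usually resolve each other by container name, which is why the `ping` targets `web` rather than an IP address.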

Storage

When it comes to storing data, you have a lot of options. For example, you can store data inside the writable layer of a container, which works through storage drivers. The drawback is that the data is lost when the container is removed, unless it has been saved somewhere else first. For persistent storage, Docker containers give you four options.

Data volumes: They provide the ability to create persistent storage, with the ability to name volumes, list volumes, and list the container that is associated with the volume. Data volumes are placed outside the container's copy-on-write mechanism, in a part of the host filesystem that Docker manages (or on remote storage through a volume driver), and this is what allows them to be so efficient.

Volume Container: A Volume Container is an alternative approach wherein a dedicated container hosts a volume, and that volume can be mounted or symlinked to other containers. In this approach, the volume container is independent of the application container and therefore you can share it across multiple containers.

Directory Mounts: A third option is to mount a host’s local directory into the container. In the previous cases, the volumes would have to be within the Docker volumes folder, whereas for directory mounts any directory on the Host machine can be used as a source for the volume.

Storage Plugins: Storage plugins provide Docker with the ability to connect to external storage sources. These plugins allow Docker to work with a storage array or appliance, mapping the host’s drive to an external source. One example of this is a plugin that allows you to use GlusterFS storage from your Docker install and map it to a location that can be accessed easily.
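The first three options map onto `docker run` flags roughly as follows. Treat this as a sketch: the volume name `web_data`, the container names, and the mount path `/var/www/html` are assumptions chosen to match the web server used later in this post, and the image you pass in should run a long-lived process.

```shell
# Data volume: Docker manages where the data lives on the host.
# "$1" is the image to run.
run_with_volume() {
    docker volume create web_data &&
    docker run -d --name web_vol -v web_data:/var/www/html "$1"
}

# Directory mount: any host directory can be the source.
# "$1" is an absolute host path, "$2" is the image to run.
run_with_bind_mount() {
    docker run -d --name web_bind -v "$1":/var/www/html "$2"
}

# Volume container: reuse the volumes of another container.
# "$1" is the container that owns the volumes, "$2" is the image to run.
run_with_volumes_from() {
    docker run -d --name web_shared --volumes-from "$1" "$2"
}
```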

Docker’s Registry

Docker registries are services that allow you to store and retrieve images as required. A registry is made up of Docker repositories that store your images under one roof (or at least in the same house!). The best-known public registry is Docker Hub. Private registries are also fairly common among organizations.
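Pulling from a public registry and from a private one looks almost the same on the command line. In this sketch the registry host and repository path are hypothetical arguments that you supply yourself.

```shell
# Pull from Docker Hub, the default registry when no hostname is given.
pull_public() {
    docker pull "$1"
}

# Log in to a private registry, then pull an image from it.
# "$1" is a hypothetical registry host, "$2" a repository path with a tag.
pull_private() {
    docker login "$1" &&
    docker pull "$1/$2"
}
```

For example, `pull_private registry.example.com myteam/myapp:1.0` would prompt for credentials and then pull `registry.example.com/myteam/myapp:1.0`.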

Let's do the implementation

Step 1: To use Docker, we first have to install it by using the command "yum install docker"

Step 2: We have to check whether Docker has been installed by checking its version with the command "docker --version"

Step 3: To use the Docker service, we first have to start it by using the command

"systemctl start docker"

And then we have to check whether the service has started by using the command

"systemctl status docker"

I have completed Step 2 and Step 3, as you can see in the above screenshot.
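Steps 1 to 3 can be chained into one helper, sketched below. It assumes a yum-based system and a root shell; the `-y` flag (answer yes to prompts) and `--no-pager` (print the status without opening a pager) are additions of mine to keep the run non-interactive.

```shell
# Install Docker, confirm the version, start the service, and show its status.
# Each step runs only if the previous one succeeded.
install_docker() {
    yum install -y docker &&
    docker --version &&
    systemctl start docker &&
    systemctl status docker --no-pager
}
```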

Step 4: Now that Docker is installed, we have to pull the image from which the Docker container will be launched
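This step does not name the image, so the sketch below takes it as an argument. The default `centos:7` is purely my assumption, picked because the later steps use yum and httpd; substitute whichever image you actually use.

```shell
# Pull the given image (default: centos:7, an assumption), then list the
# images available locally to confirm that it arrived.
pull_image() {
    docker pull "${1:-centos:7}" &&
    docker images
}
```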

Step 5: Launch the container by using the command

"docker run -it --name <name_of_container> <image_name>"

The "docker ps -a" command gives the list of all containers, both those that are running now and those that were launched at some point in time. Using it, you can see that my container named Task_7 was launched about a minute ago.
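Launching and listing can be sketched as two small helpers. The container name comes from this post; the image you pass in is up to you (I use `centos:7` in the example purely as an assumption).

```shell
# launch_container NAME IMAGE
# Start an interactive container with a terminal attached.
launch_container() {
    docker run -it --name "$1" "$2"
}

# List every container, running or stopped.
list_containers() {
    docker ps -a
}
```

For example, `launch_container Task_7 centos:7` drops you into a shell inside the container, and running `list_containers` from a second terminal on the host shows it in the list.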

Step 6: Now install the httpd software on top of the newly launched container

Now go to the web server's document directory by using the command "cd /var/www/html/". Create the HTML file Task_7.html by using the command "vim Task_7.html" and then read the file back by using the "cat Task_7.html" command
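Here is a non-interactive sketch of Step 6, meant to be run inside the container. A few things are my assumptions rather than part of the original steps: the page content is a made-up one-liner written with `echo` instead of vim, and the server is started with `httpd -k start`, since containers normally have no systemd to start it for you.

```shell
# Install httpd, start it, and create a test page.
# "$1" optionally overrides the document root (default: /var/www/html).
setup_httpd() {
    docroot="${1:-/var/www/html}"
    yum install -y httpd &&
    httpd -k start &&
    echo "<h1>Hello from Task_7</h1>" > "$docroot/Task_7.html" &&
    cat "$docroot/Task_7.html"
}
```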

Now check the IP of the container by using the command " ifconfig "
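If `ifconfig` is not available inside the container (on minimal images it usually comes from the net-tools package), the IP can also be read from the base OS. A small sketch, using the `docker inspect` format option:

```shell
# Print the IP address(es) Docker assigned to the named container.
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}
```

Running `container_ip Task_7` on the host prints the address to use in the next step.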

Now run the following command from the base OS to see the webpage

"curl http://<container_ip>/Task_7.html"
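The same check as a helper, with the IP and page name passed in. The `-fsS` flags are my addition: they make curl return a failure on HTTP errors and stay quiet otherwise.

```shell
# check_page IP PAGE
# Fetch PAGE from the web server at IP; fails if the server returns an error.
check_page() {
    curl -fsS "http://$1/$2"
}
```

For example, `check_page 172.17.0.2 Task_7.html`, where the address is only an example of what the container's IP might be.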

Note: This link is not permanent. It works only while the container is running; after you stop or kill the container, it will stop working.

As you can see, the httpd server has been configured successfully and it's working fine!

Setting up the Python Environment

Step 1: Install Python inside the container using the command given below:

"yum install python3"

Step 2: Now run the "python3" command to get the Python interpreter, and then run any code to check whether Python is working well
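Both Python steps can be checked without opening the interactive interpreter. This is a sketch to run inside the container; the one-line program is an arbitrary example of "any code".

```shell
# Install Python 3, print its version, and run a one-line program.
setup_python() {
    yum install -y python3 &&
    python3 --version &&
    python3 -c 'print("2 + 3 =", 2 + 3)'
}
```

If the last line of output shows the sum, the interpreter is working.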

That's all for this blog and stay tuned for more Docker tutorials and more such tech. Make sure to subscribe to our newsletter.

Thank you for Reading:)

#Happy Reading!!

Queries and suggestions are always welcome. - Nehal Ingole