Need for Container Orchestration

Imagine running an application made up of multiple services that communicate with each other, each running inside a container. Also remember that this is a crucial application for your business, so any downtime may lead to business loss.

Since this is a containerized application, it can be scaled with a few commands given to our container platform. In the real world, these services may need to be scaled up to hundreds of containers. This is where the real challenge begins: we now have to check whether all those containers are working properly, whether they are communicating properly or facing any issues, and so on. Doing all of this manually is very challenging, and that's where container orchestration tools come into the picture.

What is Container Orchestration?

Container Orchestration is all about the management of the lifecycle of containers. Container orchestration tools help to manage and automate tasks like container scaling, deployment, network management, health monitoring, and ensuring the high availability of the application.

There are multiple container orchestration tools available today, such as Docker Swarm, Apache Mesos, and Amazon Elastic Container Service (ECS). Of all these, Kubernetes is the most popular container orchestration tool.

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates declarative configuration and automation. It is often styled as K8s.

K8s was originally designed by Google, and the project is now maintained by the Cloud Native Computing Foundation.

What does Kubernetes provide us?

Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

Storage orchestration: Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

Automated rollouts and rollbacks: Whenever there are updates to your application, Kubernetes can roll out those changes easily while ensuring high availability. Kubernetes also keeps track of versions, so rolling out (shifting to a new version) or rolling back (shifting to an older version) becomes easy.
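To make the idea of version tracking concrete, here is a minimal Python sketch. It is only a toy model, not how Kubernetes stores its history: the class `RolloutHistory` and the image name `myapp` are made up for illustration.

```python
class RolloutHistory:
    """Toy record of the versions an application has run (hypothetical model)."""

    def __init__(self, initial_image):
        self.revisions = [initial_image]  # oldest first, current last

    @property
    def current(self):
        return self.revisions[-1]

    def roll_out(self, new_image):
        """Shift to a new version and remember it."""
        self.revisions.append(new_image)

    def roll_back(self):
        """Shift back to the previous version, if there is one."""
        if len(self.revisions) < 2:
            raise ValueError("no earlier version to roll back to")
        self.revisions.pop()
        return self.current


history = RolloutHistory("myapp:v1")  # "myapp" is a made-up image name
history.roll_out("myapp:v2")
print(history.current)                # myapp:v2
history.roll_back()
print(history.current)                # myapp:v1
```

Because every version is remembered, going back is just a matter of picking the earlier entry again.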

Automatic bin packing: You provide Kubernetes with a cluster of nodes (physical computers or servers) that it can use to run containerized tasks. You tell Kubernetes how much CPU and memory (RAM) each container needs. Kubernetes then automatically places your containers on specific nodes based on those requirements, so that it makes the best use of your resources.
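The placement idea can be sketched in a few lines of Python. This is a simple "first node that fits" strategy chosen for illustration; the real Kubernetes scheduler is far more sophisticated, and the names `Node` and `place` as well as the node sizes are assumptions made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int  # free CPU left on the node
    memory_mib: int      # free memory left on the node
    pods: list = field(default_factory=list)


def place(pod_name, cpu_millicores, memory_mib, nodes):
    """Put the pod on the first node with enough free CPU and memory."""
    for node in nodes:
        if node.cpu_millicores >= cpu_millicores and node.memory_mib >= memory_mib:
            node.cpu_millicores -= cpu_millicores
            node.memory_mib -= memory_mib
            node.pods.append(pod_name)
            return node.name
    return None  # no node has room for this pod


# Two hypothetical nodes with different amounts of free resources.
nodes = [Node("node-a", 1000, 1024), Node("node-b", 2000, 4096)]
placements = {
    "web": place("web", 500, 512, nodes),
    "db": place("db", 1000, 2048, nodes),
    "cache": place("cache", 500, 512, nodes),
}
print(placements)  # {'web': 'node-a', 'db': 'node-b', 'cache': 'node-a'}
```

Notice that "db" does not fit on node-a after "web" is placed there, so it lands on node-b, while the smaller "cache" pod fills the remaining space on node-a.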

Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don't respond to your user-defined health check, and doesn't advertise them to clients until they are ready to serve.
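The self-healing behaviour boils down to comparing what is running with what we asked for. The Python sketch below is a simplified illustration under that assumption, not Kubernetes code; the dictionary fields and the functions `reconcile` and `ready_endpoints` are hypothetical.

```python
def reconcile(containers, desired_count):
    """Keep healthy containers and report how many replacements to start."""
    survivors = [c for c in containers if c["running"] and c["healthy"]]
    to_start = max(desired_count - len(survivors), 0)
    return survivors, to_start


def ready_endpoints(containers):
    """Only containers that are running and ready are advertised to clients."""
    return [c["name"] for c in containers if c["running"] and c["ready"]]


containers = [
    {"name": "web-1", "running": True, "healthy": True, "ready": True},
    {"name": "web-2", "running": False, "healthy": False, "ready": False},  # crashed
    {"name": "web-3", "running": True, "healthy": False, "ready": False},   # fails health check
    {"name": "web-4", "running": True, "healthy": True, "ready": False},    # still starting up
]

survivors, to_start = reconcile(containers, desired_count=4)
print([c["name"] for c in survivors])  # ['web-1', 'web-4']
print(to_start)                        # 2
print(ready_endpoints(containers))     # ['web-1']
```

Here the crashed container and the one failing its health check are replaced, and traffic goes only to web-1 until web-4 reports that it is ready.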

Secret and configuration management: Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.

Some basic Kubernetes terminology to get started

Pod: The smallest execution unit in Kubernetes.

- At the end of the day, our services, programs, and applications run in containers, but Kubernetes needs to manage them for us. To do this, it packs these containers into a box along with some metadata used for their management, and this box is known as a "Pod". If needed, we can also have multiple containers running in a single pod.

Nodes: Physical or virtual machines providing resources

A Kubernetes cluster is formed from physical or virtual machines that provide the resources (memory, CPU, storage) needed to run the pods, other services, and Kubernetes' own internal programs. These physical or virtual machines are known as "Nodes".

In K8s we form the cluster from master nodes and worker nodes. A master node (called a control plane node in current Kubernetes documentation) is a machine where, by default, only Kubernetes' own programs run, and worker nodes are the ones where the applications requested by users run. The master nodes manage the worker nodes.

Kubectl: Client-side utility to communicate with Kubernetes.

- The client needs to tell Kubernetes its requirements, and for this Kubernetes provides an API server that exposes an HTTP REST API. From a technical point of view, we use kubectl as a client for the Kubernetes API.
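To see what "a client for the Kubernetes API" means, the Python sketch below builds (but does not send) the kind of HTTP request that listing pods in a namespace corresponds to. The server address is a placeholder assumption, and the helper `pods_url` is made up for this example; a real cluster also requires authentication (a client certificate or token), which kubectl reads from its kubeconfig file.

```python
import urllib.request


def pods_url(api_server, namespace="default"):
    """Build the REST URL for the pod collection of one namespace."""
    return f"{api_server.rstrip('/')}/api/v1/namespaces/{namespace}/pods"


# Placeholder address: replace with the API server address of your own cluster.
url = pods_url("https://192.168.59.100:8443")

# Prepare the request object only; nothing is sent over the network here.
request = urllib.request.Request(
    url, method="GET", headers={"Accept": "application/json"}
)
print(request.get_method(), request.full_url)
# GET https://192.168.59.100:8443/api/v1/namespaces/default/pods
```

So a command like kubectl get pods is, underneath, an HTTP GET against a URL of this shape, with kubectl taking care of the authentication and of formatting the JSON response as a table.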

Note 📝:

In the real world, when we use Kubernetes we deploy applications on a multi-node Kubernetes cluster, meaning the master node is a separate computer altogether and each worker node is also a separate computer.

But in our own environment we usually don't have enough resources for separate nodes, so the solution is a "single-node Kubernetes cluster", where the master node and worker node are configured on one single machine.

Setting up a single-node Kubernetes cluster on Windows

Here we are going to set up a single-node Kubernetes cluster using a tool called minikube, which can quickly set up a local Kubernetes cluster on Windows. The setup needs around 2 CPUs, 4000 MB of free RAM, and 20 GB of free disk space.

First, we need to install Oracle VirtualBox, which minikube will use. Install it from here

Make sure virtualization is turned on in your system. You can turn it on from the BIOS [here]

Now we also need to install the kubectl client-side program. We can get it from [here]. Notice that it is just an executable (.exe) file, so we can use it directly. We will be using it from cmd.

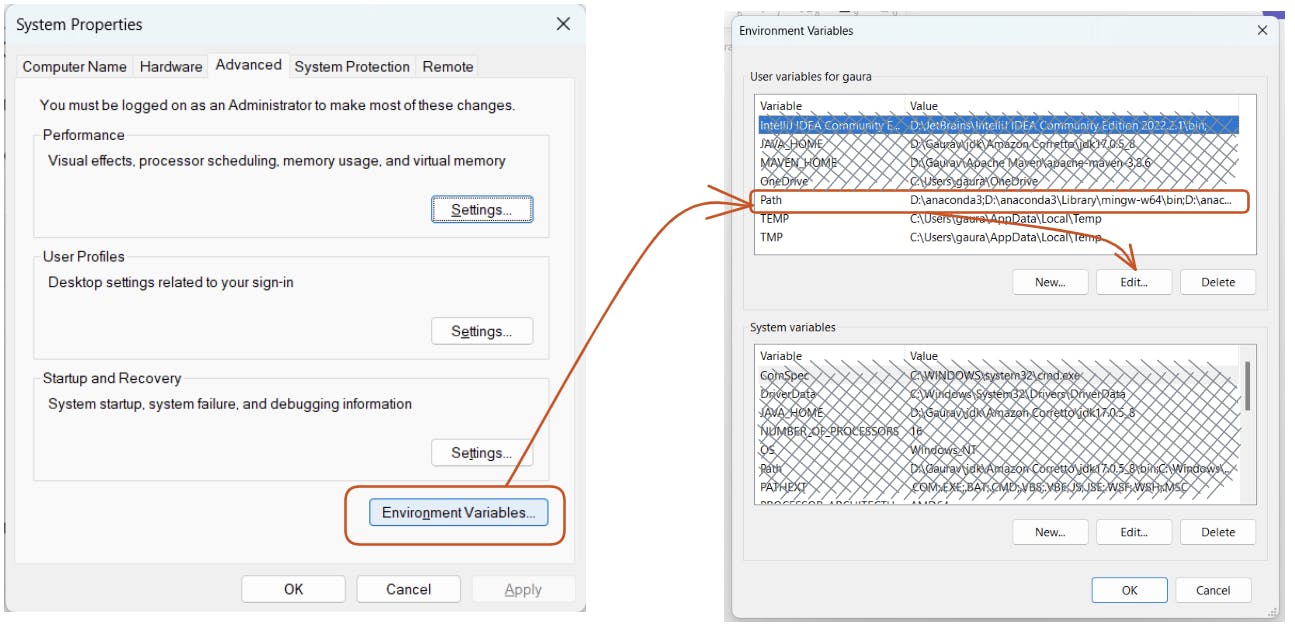

Now we would like to use kubectl from any location. For this, we need to edit the PATH environment variable and add a new entry with the location where we downloaded kubectl.exe.

Once done, we can run kubectl from anywhere.

Finally, we need to install minikube. We can download the installer from [here] and run it. Once that is done, the minikube command is available.

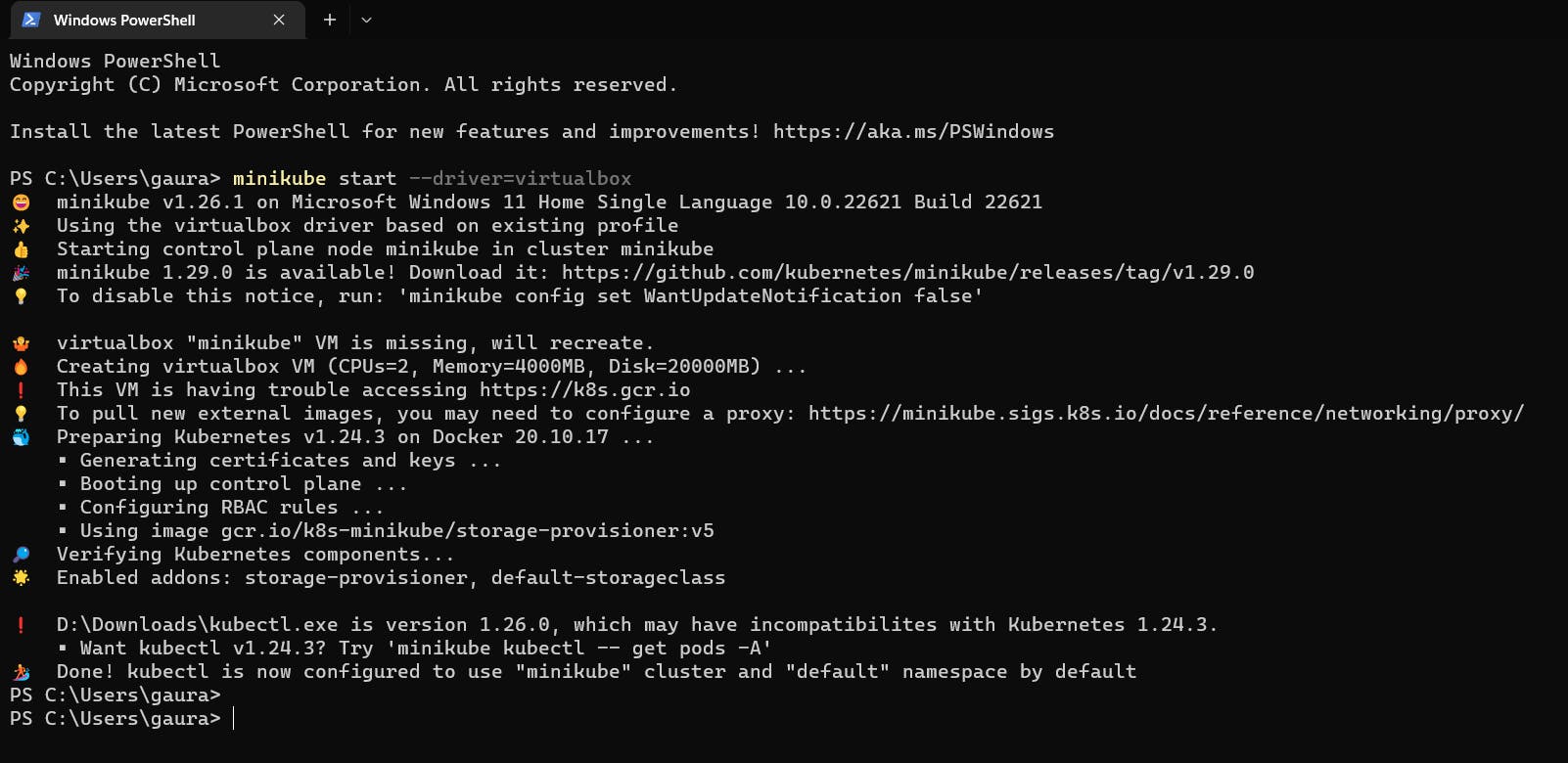

So now, to create our single-node Kubernetes cluster, run the command:

minikube start --driver=virtualbox

Amazing! Our setup is done.

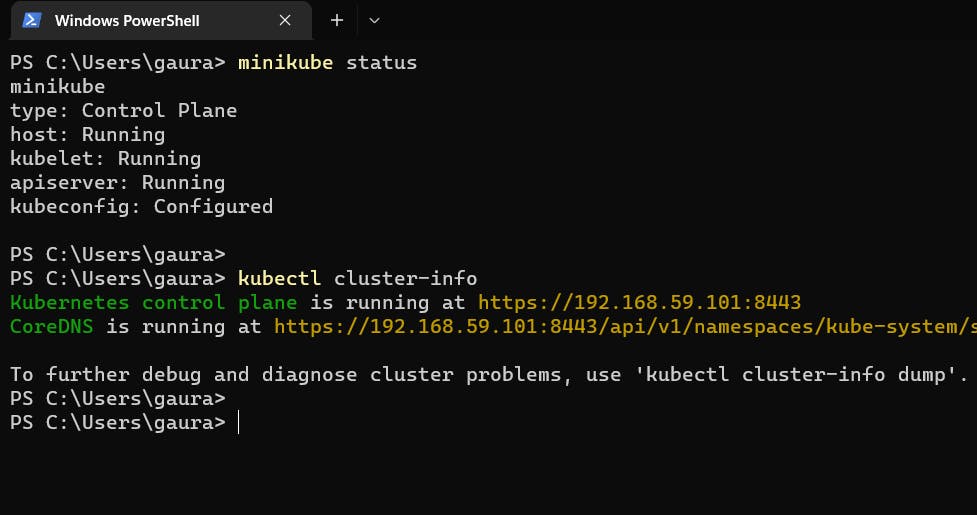

We can also check the status of our cluster and its info with the commands:

minikube status

kubectl cluster-info

Launching your first pod on Kubernetes

Launching a pod in K8s can be done with the help of kubectl in two ways:

First method: Using commands

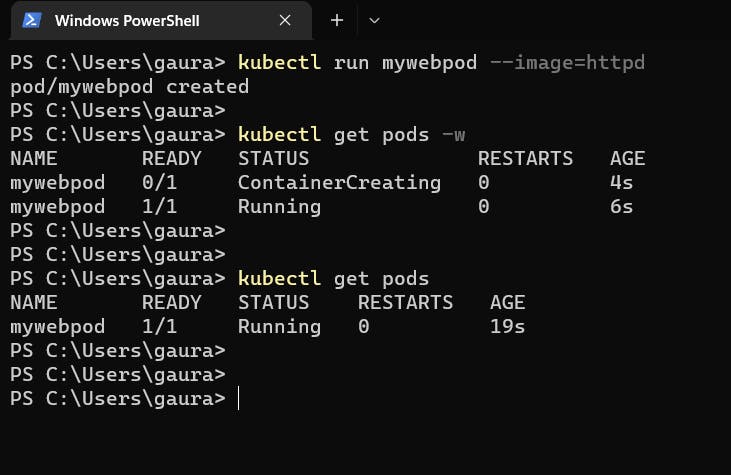

Here we just pass the command to kubectl, like this:

kubectl run <pod_name> --image=<image_name>

Note that the image is fetched from Docker Hub by default, so if it is not already present in the cluster, Kubernetes first downloads it and then launches the pod from it.

To list all the pods, use the command:

kubectl get pods

Note: we don't have to remember all these commands; we can get help from kubectl using

kubectl --help

This will equip us with the necessary commands, options, and flags as and when needed.

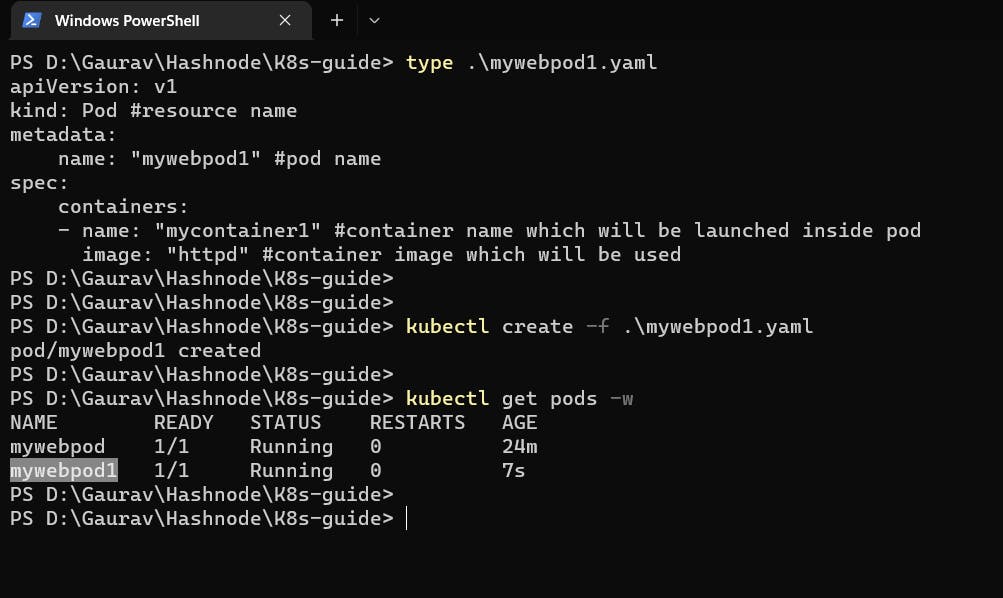

Second method: Using YAML files

- Using a YAML file to configure something on top of Kubernetes is a very common practice, especially for applications involving multiple components or resources. Here we describe a pod in a YAML file and then create it with the kubectl create command (kubectl create -f followed by the file name).

apiVersion: v1
kind: Pod # resource kind
metadata:
  name: "mywebpod1" # pod name
spec:
  containers:
    - name: "mycontainer1" # name of the container launched inside the pod
      image: "httpd" # container image to use
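Since kubectl accepts manifests written in JSON as well as YAML, the same pod description can also be generated from a program. The Python sketch below builds the manifest shown above as a dictionary and prints it as JSON; the helper name `pod_manifest` is made up for this example.

```python
import json


def pod_manifest(pod_name, container_name, image):
    """Return a minimal Pod manifest with a single container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [
                {"name": container_name, "image": image},
            ]
        },
    }


# Same names as in the YAML file above.
manifest = pod_manifest("mywebpod1", "mycontainer1", "httpd")
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and passing that file to kubectl create should behave the same way as the hand-written YAML version, which is handy when many similar pods need to be described.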

Some good-to-know stuff 🥑

Heard of OpenShift? It is a cloud-based Kubernetes platform that helps deliver a consistent experience across public cloud, on-premise, hybrid cloud, or edge architecture. OpenShift includes not only Kubernetes but also other tools needed in the container world, so it is a suite of container software.

Red Hat OpenShift has been a major supporting technology for 5G and for setting up its infrastructure. The containerized workflow is set up and managed on top of Red Hat OpenShift. Some of the major problems of 5G, like scaling and high availability, are solved with the Kubernetes-based container environments within OpenShift. [here]

That's all for this blog, folks 🙌 Stay tuned for more amazing stuff on Kubernetes and more such tech. Make sure to subscribe to our newsletter. 📫

Thank you for reading :) #HappyLearning

Queries and suggestions are always welcome. - Gaurav Pagare