Hello Techies! I hope you are all doing amazing things. I'm back with another article, this time on NLP. It's an overview of how NLP has evolved over the years and how it is moving towards Artificial General Intelligence (AGI). There is still a lot to explore🤩. I'll cover a brief history of NLP, key concepts for working on any NLP project, and the best materials and courses for learning NLP faster🚀

Let's get into it!!!

We are constantly shaping our environment to fit our human needs, and this takes many forms. One of them is making machines understand the most complex and special feature that sets us apart from the other great apes: speech.

The ability to tell tales of our creative imagination and to express our thoughts, desires, and dreams in speech is what makes humans different and special. This effortless ability helped us gather in groups of hundreds and, in today's era, billions of individuals who work together to push what we perceive as the boundaries of the human race. Our slogan became “Sky is the limit”, but it turns out there is no limit, because the ability to share ideas and ideals lets us cooperate for a common purpose. We are getting closer to changing that statement to:

“The galaxy is the limit”, and given enough time, not even time will be the limit.

With this line let's start exploring the NLP Galaxy🌌, developed by humans!!!

Let's get started.🚀

Brief Meaning of Natural Language Processing (NLP)

Natural Language Processing (NLP) is an exciting and rapidly growing field of computer science that has the potential to revolutionize the way we interact with technology. NLP is the subfield of artificial intelligence that focuses on giving computers the ability to understand and interpret human language, enabling them to follow instructions and respond to questions. Language modeling, a set of computational techniques used to analyze and generate text that mimics natural language, is an important component of NLP and the foundation of Large Language Models (LLMs). From its humble beginnings in the 1950s to its current cutting-edge research, NLP has come a long way, and its future is even brighter.

NLP has many applications, including language translation, sentiment analysis, text summarization, speech recognition, and question-answering systems. These applications let computers perform tasks that would otherwise require human intervention. To process natural language data, NLP draws on techniques from computer science, linguistics, and artificial intelligence, such as machine learning and deep learning.

The Evolution

Natural Language Processing (NLP) has undergone significant evolution over the years. Here is a brief overview of the major milestones in the history of NLP:

Rule-based systems (1950s-1980s): The earliest NLP systems were rule-based, where grammatical rules were manually created to analyze and generate natural language. These systems were limited in their ability to handle ambiguity and variability in language.

Statistical models (1990s-2000s): Statistical models, such as Hidden Markov Models and Conditional Random Fields, emerged as a new approach to NLP. These models learned from data to automatically extract patterns and relationships in language. This led to significant improvements in language processing accuracy.

Machine learning (2000s-2010s): Machine learning algorithms, such as Support Vector Machines and Neural Networks, became popular in NLP. These algorithms enabled NLP models to learn from large datasets and handle complex language tasks such as sentiment analysis, text classification, and machine translation.

Deep learning (2010s-present): Deep learning, which involves training neural networks with many layers, has revolutionized NLP. Deep learning models such as Recurrent Neural Networks and Transformers have achieved state-of-the-art performance on many NLP tasks, including language modeling, machine translation, and text generation.

Pre-training and fine-tuning (2018-present): Pre-training and fine-tuning techniques have been applied to deep learning models to improve their performance on specific NLP tasks. Pre-training involves training a model on a large corpus of text data, and fine-tuning involves further training on specific tasks. This approach has led to the development of advanced models such as GPT-3 and BERT, which have achieved impressive results on a wide range of NLP tasks.

Early Applications Of NLP

NLP has a long history of development, with early applications dating back to the 1950s. Here are some examples of early applications of NLP:

Machine Translation: One of the earliest applications of NLP was machine translation, which involves automatically translating text from one language to another. The first experiments in machine translation were conducted in the 1950s, using rudimentary rule-based systems.

Information Retrieval: In the 1960s, researchers began to develop techniques for automatic indexing and retrieving documents based on their content. This involved using NLP to extract key terms and concepts from the text, and then using those to index and retrieve documents.

Question-Answering Systems: In the 1970s and 1980s, researchers began developing question-answering systems that could automatically answer questions posed in natural language. These systems used NLP techniques to understand the meaning of the question and retrieve the relevant information from a knowledge base.

Speech Recognition: In the 1980s, researchers began developing speech recognition systems that could convert spoken language into text. This involved using NLP techniques to analyze and transcribe speech and was the precursor to modern voice assistants like Siri and Alexa.

These early applications of NLP laid the groundwork for the development of more advanced NLP systems that we use today.

Statistical Methods Of NLP

Statistical methods have been widely used in Natural Language Processing (NLP) to build models that can understand, analyze, and generate human languages. Here are some examples of statistical methods used in NLP:

Language Modeling: Language models are statistical models that are used to predict the likelihood of a sequence of words. They are often used in speech recognition, machine translation, and text generation. Language models can be built using techniques such as n-gram models, recurrent neural networks (RNNs), and transformer models.

Part-of-Speech Tagging: Part-of-speech (POS) tagging is the process of assigning a grammatical category (such as a noun, verb, or adjective) to each word in a sentence. POS tagging is an essential task in NLP and is used in many applications, such as speech recognition and machine translation. POS tagging can be done using statistical methods such as Hidden Markov Models (HMMs) or Maximum Entropy Markov Models (MEMMs).

Named Entity Recognition: Named Entity Recognition (NER) is the process of identifying and classifying named entities (such as people, organizations, and locations) in text. NER is an important task in information extraction and can be done using statistical methods such as Conditional Random Fields (CRFs) or Support Vector Machines (SVMs).

Sentiment Analysis: Sentiment analysis is the process of analyzing the emotional tone of the text. It is often used to determine the sentiment of customer reviews or social media posts. Sentiment analysis can be done using statistical methods such as Naive Bayes classifiers, Support Vector Machines (SVMs), or neural networks.
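To make the sentiment analysis item concrete, here is a minimal sketch of a Naive Bayes classifier written from scratch. The tiny training set, the function names, and the whitespace tokenization are all assumptions made for illustration; a real project would use far more data and a tested library.

```python
import math
from collections import Counter, defaultdict

# Tiny hand-made training set (hypothetical data, for illustration only).
TRAIN = [
    ("i love this phone", "pos"),
    ("great battery and great screen", "pos"),
    ("i hate this phone", "neg"),
    ("terrible battery and poor screen", "neg"),
]

def train_naive_bayes(examples):
    """Count words per class and documents per class."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return word_counts, class_counts, vocab

def predict(text, word_counts, class_counts, vocab):
    """Return the class with the highest log posterior (add-one smoothing)."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label in class_counts:
        # Log prior: how common this class is in the training data.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            if token not in vocab:
                continue  # ignore words never seen in training
            # Log likelihood of the word given the class, smoothed by +1.
            score += math.log(
                (word_counts[label][token] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(TRAIN)
print(predict("great phone", *model))
print(predict("terrible phone", *model))
```

Working in log space avoids multiplying many small probabilities together, and the add-one smoothing keeps a word that was never seen with a class from driving that class's score to zero.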

Statistical methods have played a significant role in the development of NLP and continue to be a key tool for building advanced NLP systems.
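The language modeling idea described above, predicting the likelihood of a sequence of words, can be sketched with a bigram model. This is a toy illustration under stated assumptions: the three-sentence corpus is made up, the function names are hypothetical, and no smoothing is applied.

```python
from collections import Counter

# Toy corpus (hypothetical); real language models are trained on far more text.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]

def train_bigram_model(sentences):
    """Count contexts and bigrams, padding each sentence with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        unigrams.update(tokens[:-1])  # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def bigram_prob(prev, word, unigrams, bigrams):
    """Maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev)."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

def sentence_prob(sentence, unigrams, bigrams):
    """Probability of a sentence as the product of its bigram probabilities."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(prev, word, unigrams, bigrams)
    return prob

unigrams, bigrams = train_bigram_model(CORPUS)
print(bigram_prob("the", "cat", unigrams, bigrams))
print(sentence_prob("the cat sat on the mat", unigrams, bigrams))
print(sentence_prob("mat the on sat cat the", unigrams, bigrams))
```

Because there is no smoothing, any sentence containing a word pair that never appeared in the corpus gets probability zero, which is one reason practical n-gram models add smoothing or back off to shorter contexts.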

Lifecycle of Any NLP Task

The lifecycle of an NLP task can be broken down into several stages, each with its own set of processes and techniques. Here is a high-level overview of the typical lifecycle of an NLP task:

Data Collection: The first step in any NLP task is to collect and prepare the data. This involves identifying the data sources, gathering the data, and cleaning and pre-processing it to ensure that it is in a usable format.

Data Exploration and Analysis: Once the data has been collected and pre-processed, the next step is to explore and analyze it. This involves using various techniques such as descriptive statistics, data visualization, and text analytics to gain insights into the data and identify any patterns or trends.

Feature Extraction: Feature extraction is the process of identifying and selecting the relevant features from the data that will be used to train the NLP model. This involves using techniques such as tokenization, stemming/lemmatization, and part-of-speech tagging to normalize the raw text, and then converting it (for example with bag-of-words counts, TF-IDF weights, or embeddings) into a numerical representation that can be used by machine learning algorithms.

Model Selection and Training: Once the features have been extracted, the next step is to select the appropriate machine learning algorithm and train the model. This involves splitting the data into training and testing sets, tuning the model parameters, and evaluating the model performance using various metrics such as accuracy, precision, recall, and F1-score.

Model Deployment: Once the model has been trained and tested, the next step is to deploy it in a production environment. This involves integrating the model into a software application or system and testing it in real-world scenarios to ensure that it is functioning as expected.

Model Monitoring and Maintenance: After the model has been deployed, it is important to monitor its performance and make any necessary updates or changes to ensure that it continues to perform well over time. This involves monitoring the model's accuracy and performance metrics, identifying and addressing any issues or errors, and retraining the model as needed to keep it up-to-date and accurate.
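As a rough illustration of the feature extraction stage in the list above, the sketch below tokenizes two made-up sentences and turns them into bag-of-words count vectors. The function names and the simple regex tokenizer are assumptions for this example, and stemming/lemmatization is left out to keep it short.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def build_vocabulary(documents):
    """Map every distinct token to a column index, in sorted order."""
    tokens = {tok for doc in documents for tok in tokenize(doc)}
    return {tok: idx for idx, tok in enumerate(sorted(tokens))}

def vectorize(text, vocabulary):
    """Turn one document into a list of token counts (bag of words)."""
    counts = Counter(tokenize(text))
    vector = [0] * len(vocabulary)
    for tok, count in counts.items():
        if tok in vocabulary:  # tokens outside the vocabulary are dropped
            vector[vocabulary[tok]] = count
    return vector

# Hypothetical example documents.
docs = ["The movie was great, really great!", "The movie was boring."]
vocab = build_vocabulary(docs)
vectors = [vectorize(d, vocab) for d in docs]

print(vocab)    # column order: boring, great, movie, really, the, was
print(vectors)  # [[0, 2, 1, 1, 1, 1], [1, 0, 1, 0, 1, 1]]
```

Each document becomes a fixed-length vector with one column per vocabulary word, which is the kind of numerical input a classical machine learning model expects.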

These are the main stages in the lifecycle of an NLP task, and the specific processes and techniques used at each stage will vary depending on the task and the specific data and tools being used.
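The evaluation metrics named in the model selection and training stage (accuracy, precision, recall, and F1-score) can be computed in a few lines. This is a minimal sketch for a binary task; the function name, the label strings, and the example predictions are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive="pos"):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical test-set labels and model predictions.
y_true = ["pos", "pos", "pos", "neg", "neg", "neg"]
y_pred = ["pos", "pos", "neg", "pos", "neg", "neg"]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here the model gets 4 of 6 examples right, with 2 true positives, 1 false positive, and 1 false negative, so accuracy, precision, recall, and F1 all come out to about 0.67. On imbalanced data these numbers usually diverge, which is why it helps to report more than accuracy alone.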

Recent Advances in NLP

Pretrained Language Models: Pretrained language models such as BERT, GPT, and RoBERTa have had a significant impact on NLP. These models are trained on large amounts of text data and can be fine-tuned for specific NLP tasks, such as language translation or sentiment analysis.

Neural Machine Translation: Neural machine translation (NMT) has made significant progress in recent years, with systems that can translate between multiple languages with high accuracy. NMT uses neural networks to learn the mapping between languages and has been shown to outperform traditional rule-based or statistical machine translation approaches.

Contextualized Word Embeddings: Contextualized word embeddings, such as those produced by ELMo and BERT, have been developed to capture the context in which words are used. Unlike traditional word embeddings such as word2vec and GloVe, which represent each word as a single fixed vector, contextualized word embeddings vary depending on the surrounding words. This makes them more powerful for many NLP tasks, such as sentiment analysis and machine translation.

Multimodal NLP: Multimodal NLP involves processing multiple modalities, such as text, images, and audio, to better understand natural language. Recent advances in multimodal NLP have led to systems that can generate captions for images, recognize speech in videos, and even generate images from textual descriptions.

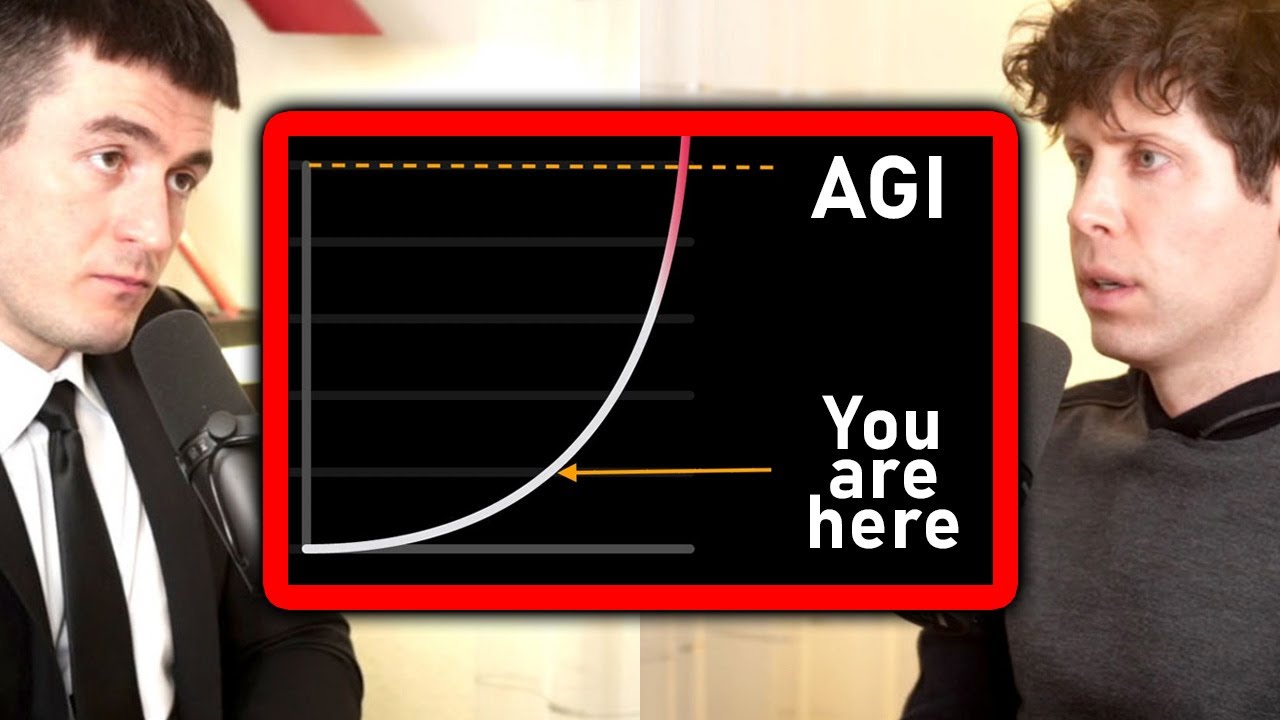

Artificial General Intelligence (AGI): AGI aims to create a system that can learn and generalize from a wide range of experiences and knowledge, rather than simply memorizing specific patterns or rules. This requires the development of more sophisticated machine learning algorithms and architectures, as well as the ability to integrate and reason across multiple domains and modalities.

Future of NLP

Continued development of large-scale language models: Recent years have seen a trend towards the development of larger and more complex language models, such as GPT-4, which can perform a wide range of NLP tasks with high accuracy. This trend will likely continue, leading to even larger and more powerful language models that can perform increasingly complex NLP tasks.

Greater focus on context and common sense: While current NLP systems are good at understanding language within a narrow context, they often struggle with understanding the broader context and common sense knowledge. Future NLP systems may place greater emphasis on incorporating contextual information and developing a deeper understanding of common sense knowledge, which could improve their ability to perform more complex tasks.

Advancements in multimodal NLP: As NLP technology continues to mature, there will likely be increasing interest in multimodal NLP, which involves processing multiple modalities, such as text, images, and audio, to better understand natural language. This could lead to new applications of NLP in fields such as virtual reality, robotics, and autonomous vehicles.

Improved ethical and responsible use of NLP: As NLP technology becomes more advanced, there will be increasing concerns about the ethical and responsible use of this technology. Future developments in NLP are likely to be accompanied by a greater emphasis on ethical and responsible use, including the development of best practices, regulations, and policies to ensure that NLP is used in a way that benefits society as a whole.

A recent example is the alleged data leak at Samsung, in which ChatGPT was reportedly involved and which raised questions about how data shared with such tools is used. Check out this story.

Overall, the future of NLP is likely to be characterized by continued advancements in technology and the development of new applications and use cases, as well as increased attention to the ethical and responsible use of this technology.

The resources and courses you can follow to learn NLP, from beginner to advanced LLM level, are shared below. You can start learning by doing.

Articles

Modern Deep Learning Techniques Applied to Natural Language Processing

Visual guides to NLP concepts

Jay Alammar blog: Jay’s blog is rich in high-quality NLP posts such as The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning), The Illustrated Transformer, and How GPT3 Works — Visualizations and Animations.

Tutorials

- Deep Learning for NLP with PyTorch: this beginner-friendly tutorial walks you through the key ideas of deep learning programming using PyTorch. It focuses specifically on NLP for people who have never written code in any deep learning framework.

Code examples

NLP Quickbook: this is intended for practitioners to quickly read, skim, select what is useful and then proceed.

The Super Duper NLP Repo: a collection of Colab notebooks covering a wide array of NLP task implementations. It contains 300+ notebooks.

Video courses

A Code-First Introduction to Natural Language Processing (fast.ai)

Accelerated Natural Language Processing (Machine Learning University)

Coursera Natural Language Processing specialization (DeepLearning.ai): the topics covered include encoder-decoder models, attention for advanced machine translation, text summarization, question-answering, chatbots, T5, BERT, transformers, reformer, and Hugging Face models.

Course notes

Repositories

NLP progress: a repository that tracks the progress in Natural Language Processing, including the datasets and the current state-of-the-art for the most common NLP tasks.

Awesome NLP repo: a GitHub repository containing a curated list of resources dedicated to Natural Language Processing.

Podcasts

Pradip Nichite: he does freelance work and publishes content focused mainly on NLP. You can follow his Discord link.

More... To stay up to date with the latest trends and technological advancements in AI, follow AI scientists and data scientists on LinkedIn, attend workshops and meetups run by various companies, subscribe to newsletters, learn from senior data scientists, work on industry-driven projects, and so on.

I'm following these and many others. You can follow them too to get started on your AI journey. You can connect with me for more details on LinkedIn, GitHub, and Twitter. Let's learn and grow together✨

If you like this article, do show it some love. I'm always open to suggestions🤗. Follow teckbakers for more such industry-ready content.

Happy Learning!!!🥳